In a previous post, I outlined some of my thoughts on what makes Linden Lab both good and bad, in terms of what they are doing wrong versus (more importantly) what they do right. As a result I received a number of comments, online and offline, taking either position, but the most interesting comment was from OpenSource Obscure:

Interesting piece, but I stopped reading at

"Not that I expect any of it to be fixed or implemented, because clearly Linden Lab has more important things on their plate to work on, like adding a Facebook button".

I just don't think things work that way.

I also argue that reiterating this idea (ie, implementing a small specific feature keeps completely different things from being fixed) is just bad for our understanding of the Second Life platform development.

You could hear this same song recently with regard to XMPP:

"they refused to fix group chat because they had to add avatar physics".

When you frame the debate this way, you can't go much further

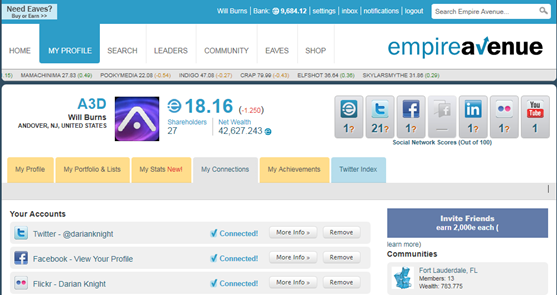

I’d like to take this moment to clarify what I meant when I said that while the more important things will likely go unimplemented by Linden Lab, there is short-term thinking in place that gives priority to things like adding a Facebook “Like” button. For those who decided not to bother reading the rest of the prior article, I’d suggest taking the time to read this in its entirety before making a comment. It helps to understand the entire position before inferring what I mean when I say something.

Toward the latter part of the prior article I outlined that many of the innovations inherent in the Second Life viewer are a result not of Linden Lab but of the community through TPVs or in-world innovations and building. Whenever Linden Lab drops the ball, or ignores the users themselves, we see these types of innovations implemented through the TPVs and community when the capability allows. However, there are quite a lot of innovations that were never implemented or were implemented and quickly removed; never to be spoken of again. Those are the sorts of innovations which Linden Lab has access to (like the native Windlight Weather) which they simply chose to forget they even had.

An obvious assertion, that really needs no further explanation, but for the sake of argument I will outline further.

While the management of Linden Lab continues to play musical chairs with their vision and implementation for the company, the direction and focus of the company have taken drastic turns over the past few years that, on the surface, resemble a flavor-of-the-month approach. We’ve seen the focus of Second Life swing wildly away from the community toward the commercial side, a direction set by Mark Kingdon with the SL Enterprise solutions, and now with Rod Humble we see an oversimplification of the interface and approach, which is the polar opposite.

While it’s true that the new user experience needs to be balanced and friendly, taking away the things which substantially encompass what makes up Second Life as an experience and dumbing it down to a glorified chat room isn’t a solution; it’s insulting. Taking away the inventory, ability to utilize a Linden balance, or meaningfully interact with the environment in the myriad of ways that have become a staple of the total experience seems draconian and misguided.

As I stated in the prior article, I’m actually a fan of the Viewer 2 experience, but not for the totality of what it’s offering. No, I’m a fan because of the technical achievements which have been introduced, such as Shared Media, which I’ve waited years to see implemented, ever since the day I downloaded and played with the uBrowser experiment. When I said time and again that we’ll all be using Viewer 2 whether we like it or not, and that we’d better get used to it, I was speaking from the point that no matter how badly Linden Lab botches their official viewer and runs in the opposite direction from the wants and needs of the majority who use the system, those deficiencies will be shored up and made much better in the TPVs by the dedicated volunteer coding community.

Shadows, depth of field, better machinima camera settings, and countless other innovations came out of the community through independent efforts and not because they came first from the official viewer. In contrast, it is these types of innovations which come about from the community first and years later might be implemented from the Linden Lab side, but not without some amount of patting themselves on the back as if they’ve just reinvented the wheel. Mesh is a fine example of this process, whereby the ability to upload and use mesh objects has been around for years, and only recently has Linden Lab decided that it’s something to implement officially (or at least try).

I can just as quickly cite their own acquisition of Windlight in 2007 from WindwardMark Interactive, whereby even this innovation was only half implemented and called a full feature and milestone. With that acquisition we saw the ability to change the atmosphere settings locally, change the clouds, color the water, and tinker around with the lighting, but the most obvious thing in that acquisition was put on a back burner and forgotten even to this day – the ability to allow the actual region and parcel owners to make settings that will change the user’s atmosphere globally to further create the intended atmosphere desired by the creator. Instead of the obvious global settings being a staple in the official viewer, it was relegated to the back burner to be partially implemented in Phoenix with some degree of success.

Having the technology versus actually using it are two different things.

There is also the unspoken, and mostly unknown, ability in Windlight to natively support full-scale weather. It was part of the acquisition, and was implemented hastily and then removed. Instead we see community in-world solutions in which objects drop thousands of scripted particles from the sky in a vain attempt to satisfy this want and need. The feature is literally built into the Windlight system natively, and it likely draws the effect to the local viewport rather than rendering thousands of particle objects in-world, which stresses the clients and maybe the servers themselves. Drawing to the viewport would save resources overall.

The often heard argument against the native weather in Windlight was that it was raining inside of buildings and structures where it shouldn’t be, which is actually yet another blatantly obvious thing being pointed out that was highly needed but never implemented –

User Created Zones. These zones are phantom objects, likely restricted Megaprims, that define a 3D space within which the object can alter the user experience: changing the lighting (maybe it’s darker in your house than outside?), restricting particles that are outside the zone from entering the zone (which would stop that rain and snow, as well as outside interference from some griefer tactics), and managing VoIP conversations so that voice within a zone is private to those inside it and not heard outside of it. The ability to create user generated zones, restrict how many prims may exist within them, and decide who has building rights within them would also be a godsend to apartment complex owners and malls. It would essentially free the users of the limitations of 2D parcels and better utilize three dimensional space, which to me seems like a no-brainer in a virtual environment.
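To make the zone idea a little more concrete, here is a rough Python sketch of how such a volume might decide whether outside particles and voice get through. Everything in it (the `Zone` class, its fields, and the two helper functions) is hypothetical and made up for illustration; it is not how Second Life or ActiveWorlds actually implement anything, and it assumes a simple axis-aligned box.

```python
from dataclasses import dataclass
from typing import Tuple

Point = Tuple[float, float, float]


@dataclass(frozen=True)
class Zone:
    """Hypothetical axis-aligned 3D zone (illustration only)."""
    name: str
    min_corner: Point
    max_corner: Point
    blocks_outside_particles: bool = True
    private_voice: bool = True

    def contains(self, point: Point) -> bool:
        # A point is inside when every coordinate lies between the corners.
        return all(lo <= p <= hi
                   for lo, p, hi in zip(self.min_corner, point, self.max_corner))


def particle_visible(zone: Zone, emitter_pos: Point, particle_pos: Point) -> bool:
    """Hide particles that drift into a blocking zone from an outside emitter."""
    if not zone.blocks_outside_particles:
        return True
    entered_from_outside = (zone.contains(particle_pos)
                            and not zone.contains(emitter_pos))
    return not entered_from_outside


def can_hear(zone: Zone, speaker_pos: Point, listener_pos: Point) -> bool:
    """Voice spoken inside a private zone is only heard by listeners inside it."""
    if not zone.private_voice:
        return True
    return (not zone.contains(speaker_pos)) or zone.contains(listener_pos)


house = Zone("house", (0.0, 0.0, 0.0), (10.0, 10.0, 5.0))
rain_cloud = (5.0, 5.0, 50.0)  # emitter high above the house
```

With this sketch, a raindrop at `(5.0, 5.0, 2.0)` inside the house would be skipped, while one at `(20.0, 5.0, 2.0)` out in the yard would still be drawn.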

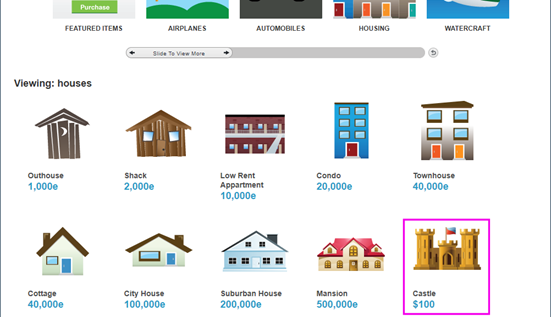

I’ve seen user created zones in other open ended virtual environments like ActiveWorlds and it’s absolutely phenomenal – right down to the ability to set the zone to act like water or alter the gravity within it… because you might want to jump into a swimming pool without remembering to purchase and wear a swimming HUD or sit on poseballs to pretend.

First Generation Tower of Terror in Active Worlds

Imagine what zones and an actual interface to create particles would allow in Second Life. Which brings me to yet another spare part of Second Life.

We have a Build Menu in Second Life, and the ability to make Particles, yet the two are entirely disconnected. There exists no tab on the build menu to define any aspect of a particle emitter when building, short of custom coding the script yourself or buying a pre-made script to drop into the object. All of the particle effects you saw in the video above are made with the build menu in Active Worlds, which lets you define that the object emits particles through a point and click list of options and numbers, including the actual image used for the particle or whether the item is emitting objects as particles. I won’t even get into the point that particles run locally per user and do so at a framerate that would put Second Life particles to shame. Again, we see an instance where the community has picked up the ball where Linden Lab has dropped it, in that if you want a Particle Creation GUI, you have to buy one from Marketplace. Clearly, this is important if the actual users are supplementing the lack of obvious ability via the creation of 3rd party HUDs for sale.
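For a sense of how little a particle tab would really need to do, here is a hedged Python sketch that takes the kind of values a point and click form might collect and writes out the LSL script for you. The function names and form fields are my own invention for illustration; the generated script relies on the `llParticleSystem` call and `PSYS_*` constants from LSL, and I have not tested the output in-world, so treat it as a sketch rather than a finished tool.

```python
def lsl_vector(values):
    """Format three numbers as an LSL vector literal, e.g. <1.00, 0.50, 0.00>."""
    return "<" + ", ".join(f"{v:.2f}" for v in values) + ">"


def build_particle_script(texture, start_color, start_scale,
                          max_age, burst_rate, burst_count):
    """Turn hypothetical build-menu form values into LSL source text."""
    rules = [
        ("PSYS_SRC_PATTERN", "PSYS_SRC_PATTERN_EXPLODE"),
        ("PSYS_SRC_TEXTURE", f'"{texture}"'),
        ("PSYS_PART_START_COLOR", lsl_vector(start_color)),
        ("PSYS_PART_START_SCALE", lsl_vector(start_scale)),
        ("PSYS_PART_MAX_AGE", f"{float(max_age):.2f}"),
        ("PSYS_SRC_BURST_RATE", f"{float(burst_rate):.2f}"),
        ("PSYS_SRC_BURST_PART_COUNT", str(int(burst_count))),
    ]
    # One "NAME, value" pair per line inside the rule list.
    body = ",\n".join(f"            {name}, {value}" for name, value in rules)
    return (
        "default\n"
        "{\n"
        "    state_entry()\n"
        "    {\n"
        "        llParticleSystem([\n"
        f"{body}\n"
        "        ]);\n"
        "    }\n"
        "}\n"
    )


# Example: values a user might pick in a (hypothetical) particle tab.
snow_script = build_particle_script(
    texture="snowflake",          # assumed name of a texture in the object
    start_color=(1.0, 1.0, 1.0),
    start_scale=(0.1, 0.1, 0.0),
    max_age=6,
    burst_rate=0.5,
    burst_count=20,
)
```

The point is that the form only has to fill in a list of name and value pairs; users who want absolute control can still open the resulting script and tweak it by hand.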

From this point we can also move onward to talk about the implementation of actual Reflective objects and Mirrors, another useful innovation that was tested early on and then retracted – never to be spoken of again.

It’s almost like it never existed…

With Viewer 2 we saw a drastic reimagining of the interface and viewer, one built to resemble the common web browser in functionality. Yet new users who see this layout will likely wonder why an entirely different interface exists for the built-in web browser, instead of the viewer layout itself natively serving as a web browser as well. We have an address bar at the top now, which to a majority of users serves no real purpose and is ignored. The obvious was again overlooked, in that there is a disconnect between what the viewer looks like and what the intended functions should be. Allowing actual web addresses to be entered into the address bar would have been a no-brainer, since that’s the first thing you’d think an address bar up there would do.

So how would an address bar in a virtual world viewer function?

That’s where the 3D Canvas comes into play. In programming it is fairly trivial to create an additional rendering canvas and layer them with only one in view at any given time. In this case, we speak of the 3D Rendering canvas used in the viewer to display the 3D world around you, as well as the Web Browser canvas which is already implemented and seen by every single user of Second Life when they first log in. Instead of using that web canvas natively in the viewer, we are greeted with a separate window as a web browser overlaying the 3D canvas – which for all intents and purposes makes it pointless to use and I suspect is the underlying reason why most people would choose to have web links open in an external browser.
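As a minimal illustration of layered canvases with only one in view at a time, here is a small Python sketch. The `CanvasStack` class and the two stand-in draw functions are hypothetical and have nothing to do with the actual viewer source; they only show the switching idea.

```python
class CanvasStack:
    """Hypothetical set of full-window canvases with exactly one visible."""

    def __init__(self):
        self._canvases = {}
        self._active = None

    def add(self, name, draw_fn):
        # The first canvas added becomes the visible one by default.
        self._canvases[name] = draw_fn
        if self._active is None:
            self._active = name

    def show(self, name):
        if name not in self._canvases:
            raise KeyError(f"unknown canvas: {name}")
        self._active = name

    @property
    def active(self):
        return self._active

    def render(self):
        # Only the active canvas is drawn; the others stay loaded but hidden.
        return self._canvases[self._active]()


# Stand-ins for the real renderers.
stack = CanvasStack()
stack.add("world3d", lambda: "drawing the 3D world")
stack.add("web", lambda: "drawing the web page")
```

Calling `stack.show("web")` swaps the whole window over to the web canvas, and `stack.show("world3d")` swaps it back, with no separate floating browser window involved.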

Furthermore, the very nature of a dual purpose canvas for 3D and 2D Web would facilitate the usage of web addresses in conjunction with 3D locations as part of the region and parcel properties, and would greatly improve the ease of teleportation. It is much easier to find a location in a 3D environment if you know they have a normal website than to track down a complicated SLURL and enter that into the address bar. This doesn’t, however, invalidate the usage of Landmarks in-world, because those are a staple and very useful.

If Linden Lab were really interested in tying the web into Second Life as a native function, they’d have noticed this very basic and obvious thought process the moment they received their first preview of the Viewer 2 interface. They did not. As an aside, I’ve already posted a JIRA outlining the entire functionality, with mock-ups showing step by step how such a thing would work and why such functionality is beneficial (if not trivial, since much of the functionality exists built-in but goes unused in proper context) [JIRA #VWR-22977].

Wouldn’t it be nice to be able to type an actual web address into the Viewer 2 address bar and get the website loaded on the full canvas? Better yet, if a region or parcel has their web domain address in their Parcel Properties, wouldn’t it be great to get a notification that the web site you are visiting has a related location in Second Life and ask if you’d like to teleport there? How about the number one reason Linden Lab should have done this by now: Seamless and native integration of the SL Marketplace into the Viewer.
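A rough Python sketch of what that address bar logic could look like follows. The `PARCEL_DOMAINS` table, the `resolve_address` function, and the action names are all assumptions made up for illustration (the real lookup would presumably come from the Parcel Properties), and the only SLURL form handled here is the `secondlife://` scheme.

```python
from urllib.parse import urlparse

# Hypothetical lookup of web domains that parcel owners have registered,
# mapped to (region name, x, y, z). Example data only.
PARCEL_DOMAINS = {
    "example.com": ("Example Region", 128, 128, 25),
}


def resolve_address(text):
    """Decide what the address bar should do with whatever the user typed."""
    text = text.strip()

    # In-world addresses go straight to teleport.
    if text.startswith("secondlife://"):
        return ("teleport", text)

    # Anything else is treated as a web address; add a scheme if missing.
    parsed = urlparse(text if "://" in text else "http://" + text)
    host = (parsed.hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]

    # If the site has a related parcel, load the page and offer a teleport.
    if host in PARCEL_DOMAINS:
        return ("web_with_teleport_offer", parsed.geturl(), PARCEL_DOMAINS[host])

    return ("web", parsed.geturl())
```

Typing `www.example.com` would load the page on the full canvas and pop up the teleport offer, while a plain `secondlife://` address behaves the way the address bar already does today.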

If you’re building a viewer that resembles a web browser, this is essentially what a new user is expecting it to act like, taking into account the addition of 3D environments.

I’m citing half finished and wholly ignored features and usability fixes that seem like common sense and date back as far as 2007. But instead of working on these sorts of things, we get a nice email from Linden Lab telling us about how they’ve essentially bastardized our profiles (as well as the viewer even further) and would love for us to link it up to Facebook and other social media.

I will be the first to admit that Linden Lab isn’t a total screw-up, because they did manage to improve the teleportation time and a number of low level fixes. Despite this, the high level lobotomizing of the viewer experience as well as blatantly ignoring the obvious things that the viewer actually needs or should have irks me to no end. It’s as if their priorities are horribly skewed…

There is such a focus on the machinima community that it’s starting to piss me off, quite honestly. I’m not against the machinima community one bit, and I think the work they do is often times indescribable and breathtaking – but since joining the SL Press Corp the only thing I’ve seen in my email regarding the SL News is machinima related – as if that’s essentially all the news in Second Life that is actually important to put out on the group notice.

You know what would make all of the machinimatographers drool in unbridled joy (not to mention the other 90% of the registered accounts that seem to be getting what they want from TPVs instead)?

Tell them they can now create controllable zones for lighting, atmosphere, etc. If you’re having trouble figuring out how to go about that, go talk to ActiveWorlds and ask them how they managed to pull it off in its entirety 7 years ago.

Tell them they now have access to Windlight Weather Effects. It’s only been sitting around for five years now, waiting to be implemented from part of a package they acquired in 2007. No rush or anything, Linden Lab, I’m happy to know I now can link my profile to Facebook in the meantime.

Tell them they now have actual Mirrors and reflective surfaces again instead of making them fake it against the water. Again, no rush or anything… it’s not like the dynamic reflection shader isn’t already in use for the water, proving it works just fine when enabled.

Tell them the advancements in TPVs are being ported over to the official viewer – start with working with Kirsten’s Viewer team and Phoenix to make that happen. Because it’s not like the community is leapfrogging the paid coding army of Linden Lab, implementing the things they wanted and asked for years ago.

Tell them that you actually realized the importance of ease of access and creation for Particle Effects and have implemented the toolset into the Build Menu to facilitate amazing effects and quicker turnaround, while leaving the ability to write the script for a particle effect should the users want absolute tweaking ability and control over the end product.

Take a hard look at how much Linden Lab has lobotomized the viewer and instead think about ways to make it coherent again. Linden Lab created a viewer that pretends to be a web browser in the guise of a 3D virtual environment viewer, and the result is that it doesn’t seem to do either very well. So instead of trying to make it more reasonable, they’ve decided to just start stripping away all the things that actually make the experience what it is, leaving the new users thinking that Second Life is just a glorified 3D Chatroom.

It’s time to actually address the long standing issues, and think about how to really pull the experience together properly. When I say that Linden Lab likely won’t be doing these things anytime soon, because they’re too busy implementing a Facebook “Like” button, it’s 100% true. It’s about what they believe is actually a priority, and right now it’s “Fast, Easy, Fun” versus “Finish what you started in 2007 and then work your way forward”. This of course is evidenced by the recent announcement that users voting on things in the JIRA didn’t actually make a difference because Linden Lab was ignoring the popular votes, which is exactly the same as admitting they are ignoring the community and what they want.

Their priorities are skewed, plain and simple. Chasing after the current buzzwords of social media, trying to make a Second Life viewer that works in a web browser (and eats 1GB of traffic an hour), slicing and dicing the new user experience into something that looks nothing like the actual experience that is Second Life.

I hate to be the bearer of bad news, but that is exactly the way the process works. They are a company caught up in the ever changing whims of the management, and it shows in the nearly incoherent Viewer 2 and company vision. It is a viewer that is now a hop and skip away from becoming The Sims with multi-user chat – and I take great offense to that.

While I applaud what Linden Lab has done, begrudgingly ignoring the massive amount of screw-up involved and scraping to find at least a nugget of positive from the mountain of fecal matter it’s buried under, if you want to know where the real innovation actually is – it’s not at Linden Lab.

I’ll turn your attention to the community and the brilliant Third Party Viewers they maintain. The user generated content that seeks to make up for the forgotten tasks that Linden Lab continually ignores while chasing that social media dream.

When the community is doing a better job at making a viewer for a product than the company that actually makes the product is doing, you start to understand that if you start thinking your community can be put off while you chase your tail for a few years, they’ll all just stop listening to you and wander off to make a better experience themselves.