An initial Metaverse Blueprint. Beyond #SecondLife

Today’s post adds something of interest to the current discussion concerning the Metaverse. However, instead of going on about whether or not what we already have constitutes a Metaverse, or debating whether today’s existing systems are a possible path to that Metaverse, I’d like to offer a very different approach.

What I’ll be writing today circumvents that particular set of topics and gets right to the root of the matter by addressing what a Metaverse will actually accomplish. Call it a rough draft or a blueprint; these are the things we should be focusing on today if we ever want to realize that dream which is the Metaverse.

Bottom Up

Straight out of the gate, I will say that our approach thus far has been lackluster. This isn’t anyone’s fault in particular; we simply have our priorities askew. I applaud =ICAURUS= for taking some initial steps to go about this, but immediately I’m throwing the flag out onto the playing field for a penalty.

Addressing whether or not the next system has the appropriate rendering engine is a top down approach. We’re talking about the what instead of the how. This is a dangerous misstep and is usually the first mistake these endeavors make when attempting to build the Metaverse. For instance, let’s look at Linden Lab. Second Life was built without any real expectation that it would become as popular as it did, and that philosophy dictated some decisions up front which came back to haunt them later on in the lifecycle.

Such thinking in the beginning usually leads down the road to situations where we’re talking about patching an outdated system. So let’s think about this from the bottom up instead of trying to build the skyscraper starting from the 100th floor and working our way to the basement.

Root Prim

To begin, let’s ask a very basic question -

What can the Metaverse actually do; or more importantly what is it actually accomplishing?

The simple answer to this is that the Metaverse is a Spatial Representation of Data, wherein the ecosystem supports many modes of interaction from local nodes (single user) to multi-node (many users) within a contiguous space.

The next question becomes -

What data can this system represent?

In order to answer this we need to start with an existing context. We could very well just invent new types of data, but it’s far simpler to begin with existing forms of data and go from there. So as a basis, let’s say that the most important foundation for a forward looking Metaverse client is that it begins as a functional Web Browser.

This becomes our metaphorical ground level in the skyscraper. From here we build upon that foundation to the top of the skybox, but for now we need to employ the KISS method (Keep It Simple, Stupid).

The Metaverse should be able to handle the basic standards that an existing Web Browser today can handle, and do so natively. Remember, we’re building an HTML5 compliant Web Browser first (and a damned good one). I cannot stress that last point enough, because the built in web browser for Second Life is horrible. In this proper context, the web browser becomes half of the integrated experience, and so you can’t afford to screw it up or treat it like an afterthought.

Ok, so why are we doing that instead of just building the 3D Metaverse up front?

It’s safe to assume that the foundation is a Web Browser simply because it is natively a 2D context of the same data that we would like to represent Spatially.

Now we start asking the important questions.

Let’s say we now have our HTML5 compliant web browser. What does it support overall? It can handle FTP, HTTP, HTTPS, image rendering, audio playback, video playback, animated images (GIF, APNG, MNG) and of course this wonderful thing called Add-Ons and Plugins.

As of this moment, an HTML5 compliant web browser actually does a better job at the obvious stuff than the best of our Virtual World clients. Now that we can acknowledge that, I think we’re in a better position to remedy this issue up front.

Modes of Operation

I’ve gone over this in a rudimentary fashion within the confines of the Second Life JIRA (VWR-22977), but there is far more context than I let on for why I submitted it as a feature request.

That request, Built-In Web Browser Uses New Canvas Rendering Layer [Not New Floater Window], is a testament to just how far in advance I was thinking before submitting things. To this day it hasn’t been reviewed, nor did I ever actually expect that it would be. All I really wanted was to make certain it was on public display.

So here’s the bigger picture -

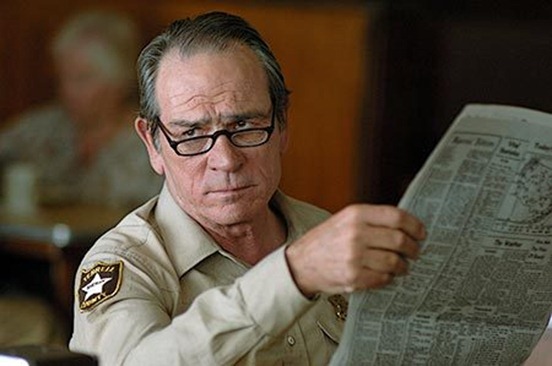

When you’re building a new Metaverse client from scratch, your first view looks something like this:

It doesn’t look exactly like that, but it’s the best reference image I’m going to provide at this time. The most important aspect is in how our modes of operation behave, which can be simplified to what changes I’ve made to the top navigation bar -

The most noticeable thing about the change is two-fold: First, and foremost, the client acts like a native web browser wherein the rendering canvas uses the entire space which is now reserved for the 3D Rendering Canvas. In effect, we’re simply using two rendering canvases wherein only one is in view at any given moment while the other one is paused.

On the far left is a new type of button which does not exist on a web browser today, despite the other buttons being common to both a virtual environment viewer and a web browser (back, forward, stop, home – and I’d like to state now a reload button for area rebake)
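To make the two-canvas idea a little more concrete, here is a minimal Python sketch. Everything in it is hypothetical: the names `Mode`, `Canvas` and `MultiModeClient` are mine, and I'm assuming the new far-left button simply toggles which canvas is in view. It only demonstrates the rule that one canvas runs while the other is paused.

```python
from dataclasses import dataclass, field
from enum import Enum


class Mode(Enum):
    WEB = "web"      # the 2D browser canvas
    WORLD = "world"  # the 3D rendering canvas


@dataclass
class Canvas:
    name: str
    paused: bool = True


@dataclass
class MultiModeClient:
    """Hypothetical client that owns both canvases; exactly one is active."""
    mode: Mode = Mode.WEB
    canvases: dict = field(init=False)

    def __post_init__(self) -> None:
        # Build both canvases up front; only the one matching `mode` runs.
        self.canvases = {m: Canvas(m.value, paused=(m is not self.mode)) for m in Mode}

    def toggle(self) -> Mode:
        """Assumed behaviour of the new far-left button: swap the visible canvas."""
        self.canvases[self.mode].paused = True
        self.mode = Mode.WORLD if self.mode is Mode.WEB else Mode.WEB
        self.canvases[self.mode].paused = False
        return self.mode
```

Calling `MultiModeClient().toggle()` flips a fresh client from web mode to world mode and pauses the web canvas, which is all the top navigation bar really needs to know about.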

So here’s the deal… this simple foundational change in the way things are organized from the get-go is enough to fundamentally change how we perceive the Metaverse and (interestingly) the entire existing Web.

Secondly, what this change implies is far greater than what was explicitly mentioned in the JIRA that I filed, but anyone who is savvy enough to give this much thought begins to see the implications this would have.

For instance, the default for a web server is index.htm or index.html and that’s how we know we’re at the “home page” location on a system. With a Multi-Mode Metaverse client, we gain something from this operational change through what can be said is index.vrtp or whatever extension you’d like to call it.

It’s your answer for universal Hypergrid teleportation. When a web address becomes capable of serving as a Metaverse teleport (representing a spatial location within the Metaverse) you’ve just opened up a Pandora’s box of opportunity. Instead of remembering a long SLURL, or HyperGrid teleport string, we can embed that as Metadata in a type of XML format on the root of a website along with the index.htm

That Metaverse Index File is seen by the client as a location with further XML data attached (like owner, description information, etc) and we can convey that in the client via a notification. When you visit that website with a standard web browser, you just get the website. But when you visit that same website (say, Google.com) with the Metaverse client, it sees index.htm/html for the Web Browser portion of the client (consider this your dynamic brochure for a location), and it also looks for index.vrtp (Virtual Reality Teleport Protocol).

In this instance, let’s say you’re browsing the Web with your new Metaverse Client. You type in MetaverseTribune.com and your HTML5 compliant web browser loads it up just like you would expect. Now, let’s say they had an index.vrtp on the root directory as well as the index.htm.

You’d get an unobtrusive notification saying that the page you are looking at has a location, and you may click to go there in the Metaverse. Or you may turn that notification off entirely and simply use MetaverseTribune.com in the 3D Address Bar instead of an SLURL/Hypergrid Teleport. If they have the index.vrtp on that server, you’ll get teleported to the location, and (as a really cool side effect) when you arrive, the location can have a website automatically loaded in the web browser portion as part of the location.

How about just an icon in the address bar that denotes the website has an available location in 3D and by clicking that icon in the address bar you will get the teleport? The same could go for visiting a location that has a website attached to it – an icon appears in the address bar that when clicked opens the homepage as set by the location owner.
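Here is a rough Python sketch of how a client might go from a typed web address to a teleport target. Treat all of it as assumption: there is no real VRTP specification, so the XML element names (`owner`, `description`, `location`, `homepage`), the sample values, and the helper names `vrtp_url` and `parse_descriptor` are invented purely for illustration. The only idea taken from above is that index.vrtp sits at the site root next to index.htm.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit, urlunsplit

# Hypothetical descriptor; the schema is an assumption, not a standard.
SAMPLE_VRTP = """\
<vrtp>
  <owner>Example Owner</owner>
  <description>Newsroom lobby</description>
  <location>ExampleRegion/128/128/25</location>
  <homepage>http://MetaverseTribune.com/</homepage>
</vrtp>
"""


def vrtp_url(address: str, filename: str = "index.vrtp") -> str:
    """Map whatever was typed in the address bar to the descriptor at the site root."""
    if "://" not in address:
        address = "http://" + address
    parts = urlsplit(address)
    return urlunsplit((parts.scheme, parts.netloc, "/" + filename, "", ""))


def parse_descriptor(xml_text: str) -> dict:
    """Pull the fields the client would show in its notification."""
    root = ET.fromstring(xml_text)
    fields = ("owner", "description", "location", "homepage")
    return {name: root.findtext(name, default="") for name in fields}
```

A client would fetch `vrtp_url("MetaverseTribune.com")`, and if the file exists, hand the result of `parse_descriptor` to the notification or the address bar icon. If the file is missing, it just stays a normal website.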

Legacy of Advancement

This is just one reason to start with our web browser context first and translate it to the virtual world, but how about other contexts? How does our Metaverse client handle an FTP connection?

This is why we’re basing things first by building a Web Browser, because then we’ll start translating how the web browser handles existing standards and protocols into our spatial environment, all while not drastically changing the initial Web mode of operation. This way, if the new user can use the web browser, they will be right at home with the Metaverse view.

Back to the FTP connection… how does our Metaverse client handle that context?

This is why we’re framing this as multi-node and local-node operation. In an FTP scenario, it would dynamically generate the environment to represent the files as objects within the environment and the folders as rooms you can walk around in. This also works for parsing local directories (just in case you felt like walking around your Hard Drive).
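As a small illustration of the files-as-objects, folders-as-rooms mapping, here is a Python sketch that covers only the local directory case. The `Room` structure and `build_room` function are hypothetical names of my own, and a real client would obviously generate geometry rather than lists. An FTP listing could be fed into the same structure, but that part is not shown.

```python
from dataclasses import dataclass, field
from pathlib import Path


@dataclass
class Room:
    """One folder, represented as a walkable room (hypothetical structure)."""
    name: str
    objects: list = field(default_factory=list)  # files placed in the room
    doors: list = field(default_factory=list)    # sub-folders, each its own Room


def build_room(folder: Path) -> Room:
    """Recursively turn a local directory into a tree of rooms."""
    room = Room(name=folder.name or str(folder))
    for entry in sorted(folder.iterdir()):
        if entry.is_symlink():
            continue  # skip links so a loop cannot recurse forever
        if entry.is_dir():
            room.doors.append(build_room(entry))
        elif entry.is_file():
            room.objects.append(entry.name)
    return room
```

Pointing `build_room(Path.home())` at your own machine would give you the "walk around your Hard Drive" layout, one room per folder.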

Now we’re asking the obvious “Why the hell would we want to do this?”

Because I should also be able to attach hyperlinks to objects in the 3D space, and if that hyperlink points to an FTP address, then it is treated like a portal into a local-node space. I’m not breaking the metaphor of interaction and this is the most important part of immersion.

In an FTP or Local-Node context (your hard drive) the owner can set a similar VRTP descriptor XML in the root which defines whether it is a singular node (not multi-user; as in, I and many others could see it but not each other) or a multi-user space. In the context of the web, that’s a hell of a lot of people suddenly traversing dynamic spaces online (potentially 6 billion virtual spaces), and right now we shouldn’t have to worry about everybody running a special server to handle it. See the basic references at the end for the reason why.

This is why the architectural foundations of this are far more important than what rendering engine we’re using. While the rendering engine is important, it pales in comparison to the “how this thing operates” versus the “what it looks like” eye-candy portion.

In the structural portion, we’re looking at a hybrid decentralized system of operation. In the dynamic modes, we’re connecting to each other in a peer to peer fashion. So you wouldn’t necessarily have to be running a special server to have an environment. A standard Web Server right now becomes a potential Metaverse Space just by adding those XML descriptors, or using in-world Hyperlinks to those dynamic spaces.

What about using media from existing servers online within the virtual world? Instead of uploading a file to an asset server, maybe you already have the media on a server of your own? Why not have the ability to state a web address as the file location?

Now we’re talking native context for existing MIME types, which already have the storage part down pat. Speaking of which – wouldn’t it be great to natively open PDF files, Images, Audio, Videos, and more in the Metaverse? Again, the web browser does this without even thinking twice... but the current generation of virtual world viewers simply... well, they don’t address this very well, if at all.

Hopefully you can see why starting with an HTML5 web browser as our foundation makes sense.

How does the Metaverse translate in 3D what a Web Browser handles effortlessly in 2D?
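A quick Python sketch of what "native context for MIME types" could look like when an object's media is just a web address. The handler table and the `in_world_handler` function are assumptions for illustration; only `mimetypes.guess_type` is real, and it guesses from the file extension rather than asking the server.

```python
import mimetypes

# Hypothetical mapping from MIME type (or top-level type) to an in-world presentation.
HANDLERS = {
    "application/pdf": "readable document object",
    "image": "texture on a surface",
    "audio": "positional sound source",
    "video": "media screen",
}

FALLBACK = "open in web mode"


def in_world_handler(url: str) -> str:
    """Pick a presentation for media that lives at a web address."""
    mime, _encoding = mimetypes.guess_type(url)
    if mime is None:
        return FALLBACK
    # Prefer an exact match, then fall back to the top-level type (image, audio, ...).
    return HANDLERS.get(mime) or HANDLERS.get(mime.split("/")[0], FALLBACK)
```

The fallback matters here: anything the 3D side can't present simply opens in the web browser half of the client, which already knows what to do with it.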

Using the Web Browser as our Metaverse Checklist.

After we are comfortable handling the translation of existing data into our Metaverse context, we can move on to Metaverse specific contexts which need to be addressed.

For instance, a universal passport/avatar. Translation of Metaverse currencies via Exchange Rates. A Metaverse Location Crawler to work as a Search Engine. A marketplace system that is built into the client – which actually becomes damned easy when it has a native web browser context. Decentralized Asset Storage systems that are secure. Authentication. Building an SDK and licensing it.

The last item on that list is the least obvious. It’s not mandatory but probably a good way to monetize the work required to build such a system. Setting up the spaces requires no license per-se but if you want to build a new product or plugin with/for it then there is a license. Think of it like a Metaverse App Store. Or just monetize a percentage of the apps themselves and make the licensing and SDK free… whatever floats the steampunk airship…

There is a lot to solve here, and the rendering engine is probably the least of our worries. As a matter of fact, if this was properly built – then we could substitute any modern graphics engine on top of our foundation and it would work.

Plugins and Add-Ons

Just like a modern web browser, the Open Metaverse client should support an extensible plugin architecture for add-ons and outright browser plugins. Maybe the Web Browser portion just handles Chrome Extensions natively, but the Metaverse mode has its own add-ons architecture and maybe an SDK for full blown plugins to extend the viewer capability much further.

This concept of building the base system and then allowing a plugin architecture and add-ons is not new. Web browsers already do this as a de facto standard, and even if we were to look back at Cyberpunk culture (Shadowrun), we had decks where there were slots to load custom “apps” which extended or improved the custom experience.

I’m going with the fictional Metaverse concept here, and our current generation of actual Web Browser as proof this is the right approach to our future Metaverse system. Modular and Extensible.
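For the coders in the audience, a bare-bones Python sketch of what "modular and extensible" might mean in practice: a registry where add-ons subscribe to client events. The `PluginRegistry` class and the `arrive` event name are hypothetical. This is one common way to structure add-on hooks, not a description of how any existing viewer does it.

```python
class PluginRegistry:
    """Minimal add-on hook system: plugins register callbacks for named events."""

    def __init__(self) -> None:
        self._hooks = {}  # event name -> list of callables taking a payload dict

    def on(self, event: str):
        """Decorator an add-on uses to subscribe to an event."""
        def decorator(func):
            self._hooks.setdefault(event, []).append(func)
            return func
        return decorator

    def emit(self, event: str, payload: dict) -> int:
        """Called by the client core; returns how many add-ons handled the event."""
        handlers = self._hooks.get(event, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)
```

An add-on would decorate a function with `@registry.on("arrive")`, and the core would call `registry.emit("arrive", {...})` after a teleport. The core never needs to know which add-ons are loaded.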

Open Metaverse Foundation

An Open Metaverse Foundation should be the equivalent of the Mozilla Foundation in regard to the Metaverse. Time to shake things up and become cool again.

Basic References

Let’s say you’re an aspiring coder (or team of coders) who are looking to tackle this next step of the Metaverse… below I’ll list some exceedingly helpful pointers for reference. These links should give you a head start for the foundation aspects:

P2P Architecture – Solipsis Decentralized Metaverse. It offers Area of Interest Networking, and a peer to peer method for handling larger amounts of people. When the system starts scaling upward and becoming popular, you’ll be glad this is in your back pocket to load balance against. This is likely the diamond in the rough that would allow the entire pre-existing Internet to be turned into dynamic multi-user spaces – ie: Instant Metaverse. The website is locked down tight and may never return – however I do happen to have a copy of the source code and research paper archived if anyone is interested.

Asset Servers – I could suggest something like Owner Free File system. It’s a multi-use blocks storage paradigm (Brightnet) that would allow the budding Metaverse creator to balance existing caches of users against having to centrally store it all. Saves a lot of redundant bandwidth and processing.

Feel free to make this a community reference for ideas on where the pieces of the overall puzzle lie for aspiring coders. Add your own references to the comments below.

This is only a very rough stream of consciousness post, and shouldn’t be taken as an “entire” proposal. The only thing I wished to convey was the proper starting context and metaphor of interaction so we (as a community) could start off on the right foot.