Give me something to believe in: Is #Euclideon Unlimited Detail a hoax?

Every revolutionary idea seems to evoke three stages of reaction. They may be summed up by the phrases:

- It's completely impossible.

- It's possible, but it's not worth doing.

- I said it was a good idea all along.

- Arthur C. Clarke

Where to begin?

Recently I made a post on Google+ about the future of graphics technology, citing both the Outerra engine and the upcoming Euclideon engine. In some regard, they represent an interesting crossroads in the industry: one company (Outerra) is using the polygon methodology mixed with procedural systems, while Euclideon is using a variant of a voxel-based system with procedural methodologies. What transpired was a really interesting debate between me and one of the staff from Outerra (Brano Kemen), who falls somewhere between the first and second stages of reaction listed above from Arthur C. Clarke.

His insistence that Euclideon was likely just a hoax wasn’t what raised my eyebrow, but instead it was the reasoning he was using. In a typical fashion, he put forth his best arguments against Euclideon from the perspective of a current generation technology programmer.

The obvious stance being – Well, this isn’t a new idea and it’s been tried before, but nobody has managed to pull it off like Euclideon claims they have.

I’m a little perplexed at this statement because it’s really no different than saying:

Thousands of people in the past have tried this and failed, therefore it’s not possible that somebody will come along and succeed.

At best, that’s a bit of faulty logic.

Generally speaking, if you’re coming at this from the perspective of what you already know in the industry, then the Euclideon engine looks like nothing more than witchcraft and magic. Of course, Arthur C. Clarke also notably commented on this phenomenon as well:

Any sufficiently advanced technology is indistinguishable from magic.

– Arthur C. Clarke

Past Tense Future

I did a few articles on this blog a while back concerning the Euclideon engine and what I believed at the time were the general mechanics that made it so powerful (and unique). For the most part, the more I research this system and read (or listen to) the details that are made available, the more I am convinced it is not a hoax. If you would like to read the original posts on this subject, feel free to hop over to Quantum Rush and Quantum Rush [Redux] for some perspective.

To be fair, the Outerra engine is marvelous in its own right and has definitely earned my respect for what is being accomplished, but in the grand scheme of things, it’s like praising one technology at the height of advancement but knowing it’s a sort of last hurrah! before the sun sets and a new age approaches. I say the same thing about Crytek as well, and even they believe that technology like Euclideon is not a hoax because they tried to import a point-cloud model of a tiger during their research on the Crytek engine.

So in the grand scheme of things, at least one company thinks it’s possible and they sure as hell are bigger than Outerra. That being said, Crytek also acknowledged that they didn’t manage to crack the secret recipe for point-cloud data approaches and went with the approach they have now in the Crysis 3 engine.

Outerra Engine in alpha stages. Ground is procedural, but models are polygon.

In the beginning, was the Pixel

Simply put, the Euclideon engine is not a typical voxel engine. It may use similar foundations from this technology, but that’s about where the similarities end.

In order to wrap our heads around the claim of Unlimited Detail, we first start with the idea that your screen only has a certain amount of pixel space. Let’s say this is your screen resolution and go with 1900x1200 as a default resolution (which is mine).

Now, the first thing we ask is how many pixels is that on the screen?

It’s really a math problem, simple multiplication:

1900 pixels wide by 1200 pixels high.

This math problem yields us an answer of:

2,280,000 pixels on screen.
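As a quick check of that multiplication, here is a tiny sketch. The function name `pixel_count` is made up for illustration.

```python
def pixel_count(width, height):
    """Number of pixels on a screen of the given resolution."""
    return width * height


total = pixel_count(1900, 1200)
print(f"{total:,} pixels on screen")  # prints "2,280,000 pixels on screen"
```

However detailed the models are, this number is the ceiling on how many colors the engine has to decide on per frame.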

We can display photographs on screen at 1900x1200 resolution and that still image looks, well, photorealistic. But in a 3D Engine, photorealistic seems to be a word reserved for rendering farms and a year of processing.

In the case of Euclideon, they realized something that I don’t think the rest of the industry realized when dealing with point-cloud data models. Typically we’re loading the entire model into memory and shuffling it around, and that actually does get computationally expensive, but we’re talking about also moving around all the little digital atoms in the model that you can’t see, which is about 90% of the model at any given time.

Why exactly are we shuffling around 90% of a model we can’t see? That means we’re wasting 90% of our processing power doing stuff nobody will notice.

The obvious question then became:

What can the user actually see?

The answer to this is clearly:

They can only see what’s in front of them at any given moment, or whatever pixels are lit up in their resolution.

Well, this gives us something interesting to think about. If the pixel space 1900x1200 is the maximum amount of pixels on screen at any moment, then the only thing we need to know from there is what color those pixels are.

This is just one half of the equation, though. So we set this thought aside for a moment and ask:

How do we figure out what the user can see out of those 2,280,000 pixels on screen?

The World of Tiny Little Atoms

Setting aside the answer of 2,280,000 pixels on screen, we then look to figure out how we deliver only what will satisfy that prior question of what of the models can be seen in that screen space.

This is the point where another explanation from Bruce Dell in a recent interview comes to mind. He explains that current systems pull the entire book (the model) and process all of it, and then asks what happens when you properly index the individual words in the book and make them searchable.

In a point-cloud model, we’re working with little digital atoms, so the model file itself is comprised of atomistic descriptions. The goal, then, is to figure out which of those digital atoms in the model file are visible on screen at any given moment and ignore the rest of the model file.

So when you take a model of 500,000 polygons and convert it to the Euclideon point-cloud data format (which for all intents and purposes likely also indexes those points in the file) you end up with a high resolution point-cloud model where every point is indexed for searching. This is where we get the beginning of the second part of our answer for Euclideon and where the typical voxel engines stop.

The Euclideon engine is said to work a lot like a search engine, where the only search query is:

What pixels on the screen correspond to the point-cloud data in the model files that this user can see?

When we do a camera check in-world to see what is in front of the user, the engine likely returns the models it sees, and then the individual points from those files that are visible to the user within the screen space (again, 2,280,000 pixels).

Knowing this information, we ask the point-cloud data models up front to return only the points within it that correspond to the pixels on screen. Since the point-cloud models are pre-indexed to begin with, and the points (atoms) inside of it are individually indexed inside the file, only the points in the model that match the search query are returned as an answer to the engine, ignoring the other 90% of the file up front.
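To make the "search query" idea concrete, here is a minimal sketch of what an indexed lookup could look like. It is a guess at the general shape, not Euclideon's actual file format or algorithm. The grid index, the `CELL` size, the integer coordinates, the flat (orthographic) view straight down the z axis, and the names `build_index` and `query_visible` are all assumptions made for illustration.

```python
from collections import defaultdict

CELL = 8  # hypothetical index cell size, in world units


def build_index(points):
    """Bucket (x, y, z, color) points by coarse (x, y) cell, once, at conversion time."""
    index = defaultdict(list)
    for x, y, z, color in points:
        index[(x // CELL, y // CELL)].append((x, y, z, color))
    return index


def query_visible(index, x0, y0, width, height):
    """Return {pixel: color} for a flat view looking down the z axis.

    Only index cells that overlap the view rectangle are touched, and each
    pixel keeps only its nearest (smallest z) point.
    """
    nearest = {}
    for cx in range(x0 // CELL, (x0 + width - 1) // CELL + 1):
        for cy in range(y0 // CELL, (y0 + height - 1) // CELL + 1):
            for x, y, z, color in index.get((cx, cy), ()):
                px, py = x - x0, y - y0
                if 0 <= px < width and 0 <= py < height:
                    if (px, py) not in nearest or z < nearest[(px, py)][0]:
                        nearest[(px, py)] = (z, color)
    return {pixel: color for pixel, (z, color) in nearest.items()}


points = [(1, 1, 5, "red"), (1, 1, 2, "blue"), (100, 100, 1, "green")]
frame = query_visible(build_index(points), 0, 0, 4, 4)
print(frame)  # prints {(1, 1): 'blue'}
```

The far-away green point is never even looked at, and the red point hidden behind the blue one is dropped, so the answer can never hold more entries than there are pixels in the view.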

What we are likely left with then is something like this in the scene:

Clearly this is a good start, but we have quite a lot of empty space in between those pixels that are unanswered from just the points in the model files that are stored. Obviously, we wouldn’t want to store the point-cloud data at absolutely full resolution (because the storage then becomes the issue), so there must be a way to fill in the blanks on this point cloud scene.

Luckily for us, we have an answer.

Algorithmic Interpolation

More or less, what happens in between the points on the point-cloud model is likely left to an algorithmic interpolation sequence. If we have two points and there is space between them, then the algorithm takes the average of the two sides and generates a new point between them.
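A minimal sketch of that averaging, assuming each point is just a tuple of numbers (position, color, or both). The names `midpoint` and `fill_gap` are hypothetical, and plain linear interpolation is only the simplest possible choice here.

```python
def midpoint(a, b):
    """Average two points component by component."""
    return tuple((p + q) / 2 for p, q in zip(a, b))


def fill_gap(a, b, steps):
    """Generate `steps` evenly spaced points between a and b (linear interpolation)."""
    return [
        tuple(p + (q - p) * i / (steps + 1) for p, q in zip(a, b))
        for i in range(1, steps + 1)
    ]


print(midpoint((0, 0, 0), (2, 4, 6)))  # prints (1.0, 2.0, 3.0)
print(fill_gap((0, 0), (3, 3), 2))     # prints [(1.0, 1.0), (2.0, 2.0)]
```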

This isn’t a new idea, but when applied to something like voxels it gets interesting. The closer you get to the atoms in a voxel display system the more they enlarge and even start generating algorithmic interpolation to fill in the spaces, creating detail where there wasn’t before.

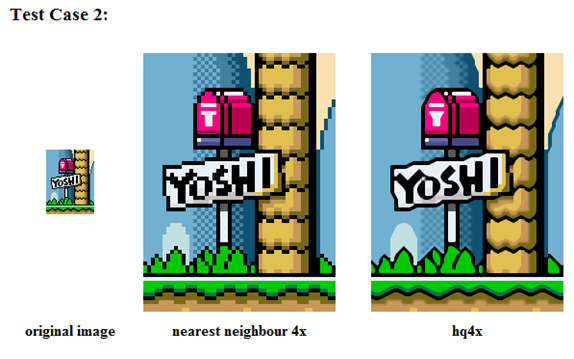

This is a common technique in console emulators to upscale 2D sprite art to modern HD screens, so Mario as sprite art can look good when made bigger on your high definition computer screen. One of the more popular algorithms for this 2D Sprite scaling is the hqx algorithm, and is available as part of most current console emulators.

In image processing, hqx ("hq" stands for "high quality" and "x" stands for magnification) is one of the pixel art scaling algorithms developed by Maxim Stepin, used in emulators such as Nestopia, bsnes, ZSNES, Snes9x, FCE Ultra and many more. There are 3 hqx filters: hq2x, hq3x, and hq4x, which magnify by a factor of 2, 3, and 4 respectively. For other magnification factors, this filter is used with nearest-neighbor scaling.
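For comparison, here is a sketch of the nearest-neighbor scaling mentioned at the end of that description. This is not hqx itself, which uses lookup tables of neighboring-pixel patterns to smooth edges. It is only the plain "make every pixel bigger" baseline, and the function name is made up.

```python
def scale_nearest(image, factor):
    """Upscale a 2D list of pixels by an integer factor using nearest-neighbor."""
    return [
        [pixel for pixel in row for _ in range(factor)]
        for row in image
        for _ in range(factor)
    ]


sprite = [[1, 2],
          [3, 4]]
for row in scale_nearest(sprite, 2):
    print(row)
# prints:
# [1, 1, 2, 2]
# [1, 1, 2, 2]
# [3, 3, 4, 4]
# [3, 3, 4, 4]
```

Nearest-neighbor creates no new detail, it only repeats what is there. Filters like hqx go a step further and guess at what belongs between the original pixels.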

Procedural Methods

In much the same manner as Outerra makes use of procedural methodologies for their terrain, Euclideon seems to make use of procedural methodologies for a majority of the engine as applied to specialized point-cloud data that is indexed efficiently.

It’s like going to Google in order to search for something. Naturally you don’t expect Google to start at the beginning of the Internet and go through everything in a linear manner looking for what you searched for?

No, instead Google crawls the Internet and indexes the content which is why when you search for something it skips 90% of the Internet and brings back just what you searched for in a few milliseconds.
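The crawl-then-search idea can be sketched with a toy inverted index. This illustrates the analogy only, it is not how Google or Euclideon actually store anything, and the document contents and function names are invented for the example.

```python
from collections import defaultdict


def build_inverted_index(documents):
    """The 'crawl' step: map each word to the set of documents containing it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for word in text.lower().split():
            index[word].add(doc_id)
    return index


def search(index, word):
    """The 'query' step: one dictionary lookup, no scan through the documents."""
    return index.get(word.lower(), set())


documents = {1: "point cloud data", 2: "polygon mesh", 3: "Point sprites"}
index = build_inverted_index(documents)
print(sorted(search(index, "point")))  # prints [1, 3]
```

All the expensive work happens once, while building the index. Each search afterwards goes straight to the matching entries and never reads the documents that don't match.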

Therein is the secret for Euclideon. The typical 3D Engine is the equivalent of having to travel from the beginning to the end of the Internet to find what it needs, whereas Euclideon is the equivalent of skipping 90% of it and bringing the results in milliseconds. This, in turn, makes Euclideon a highly optimized graphics engine, and the indexing of the files happens when you’re converting the high resolution polygon models into the Euclideon point-cloud data format (the equivalent of Google crawling the Internet).

The former of the two approaches uses up quite a lot of processing power with computation that is wasted because 90% of what it’s dealing with can’t be seen by the user to begin with, while Euclideon frees up that computation for other things.

Under the traditional methodologies today, the graphics cards are just sufficient to handle the polygon detail and all the computations, but under the Euclideon methodology, that same graphics card suddenly has nothing to do and is vastly overpowered for the engine.

So what do we do with a graphics card that is sitting around twiddling its thumbs?

Now we have room for improvement, assuming you can even imagine improving on photorealistic 3D at 25-30 FPS on the CPU alone. Stuff like astronomical resolutions for screens, ultra high fidelity, lots of GPU free to do more advanced physics and lighting calculations, and more. Instead of sharing those calculations on the GPU as an afterthought after the initial 3D calculations, now the entire card is free to unleash its full attention on it.

So the claim of Unlimited Detail is accurate, but it’s a contextual statement. Unlimited Detail, but you don’t need to load all of infinity at once to see it any more than you need to see the entire universe you live in to claim it has unlimited detail. All you can see is what is in front of you at any moment, and you take for granted that the rest of infinity exists because it’s there when you get around to seeing it.

Essentially, the Euclideon engine works like the real world does. Everything is made of tiny atoms, and nothing exists except what you can see at any given moment at the level of detail you can actually see.

Polygons are a lot like a Classical Physics approach while Euclideon is the Quantum Physics approach.

Voxel Animation

Which brings us to the sticking point concerning the ability to animate point-cloud data. It’s not exactly easy, and it has come with a lot of ups and downs. Originally, it was thought to just be impossible and that was that, but we now know that’s silly because of the three points from Arthur C. Clarke at the beginning of this article. Eventually, somebody actually did manage to figure out a way to animate sparse-voxels but the conclusion was akin to the second stage of revolutionary technology statements:

Yes it can be done, but it’s not worth the effort.

With Euclideon, I’m going to say they figured out the animation aspects further and will progress into the third statement of revolutionary technology:

I said it was a good idea all along.

The people in this industry years ago who came out and said that point-cloud data was the future of the graphics industry were likely correct. The problem with being a visionary is that often times you make predictions that are completely accurate, but far ahead of their time.

Because the technology and methodologies hadn’t been figured out in a reasonable time from those visionary statements, the rest of the industry lost interest and said:

“See? It was just a false alarm. Nobody came out with it, so it must not be possible. Nothing to see here, move along. Point and laugh at the suckers who bought into this idea to begin with.”

So when a company like Euclideon comes out and says they figured it out, those same industry people who saw the original visionary statements years ago chime in again and say the same thing –

“Oh, this again? Didn’t we already conclude it wasn’t possible and move on? *yawn* Tons of people already tried this before and failed. Clearly Euclideon is a hoax, because if it was going to be accomplished, it would have been one of us with all of our training, expertise and money - not some guy in his basement programming in his free time. Since we haven’t figured it out, clearly this Bruce Dell guy isn’t able to.”

Do you know what that makes the rest of the graphics industry, with thinking like that?

Arrogant. That’s what.

Or, more likely, these are the people who have heavily invested in their current generation technologies and are subject to technology lock-in. With all of that invested in their own systems, you would obviously think every single one of them would come out against Euclideon as a hoax or impossible. It’s a biased viewpoint altogether, with absolutely every possible motivation for being biased that exists. So why are we listening to them in the first place if we know they couldn’t possibly have an unbiased view of this type of technology?

John Carmack of id Software says it’s not possible for a few more years. Notch from Minecraft says Bruce Dell is a snake oil salesman. Even the CEO of Epic Games predicts that photorealism in games won’t happen until between 2019 and 2024.

Those people all have one thing in common:

Each has a vested interest in Bruce Dell and Euclideon being absolutely wrong.

Bruce Dell sounds unprofessional

For a guy who has been coding the Euclideon engine since 2006 as a hobbyist programmer in his spare time, do you really think he’s going to come out and get a professional actor and marketing team to do the presentation?

Secondly, he’s Australian and that accent comes across pretty heavily. He really does talk like that normally… cut the man a break.

Does this guy know the terminology of the industry? Probably not nearly as well as most would. It doesn’t really matter if you know what he’s talking about as long as he knows what he’s talking about in his head. Just because he doesn’t know the word for it doesn’t mean he doesn’t know what it is.

We’re talking about a guy who wasn’t told this stuff was impossible, and went into coding a graphics engine from scratch with the thought that it was perfectly feasible and he just didn’t know how at that time. This likely drove him harder than most coders would for solving the problem.

Think logically for a moment… when you go to college and try to earn a degree in programming, your professor is teaching you things they studied and learned from other people in the industry. There is a bias already in what you are going to learn, because it is heavily geared toward teaching you the current practices and not encouraging you to try and invent new ones. Hell, even if you’re just learning from the latest publishing of GPU Gems, you’re learning from the accomplishments of others in the industry who are themselves following accepted practice by the book. Sometimes standardized education is far more poisonous than unconventional learning methods.

They aren’t looking to turn you into a creative, unorthodox, thinker… they just want a guy/girl who can churn out code in an existing industry that has a vested interest in specifically not drawing outside of the lines on the coloring book.

Bruce Dell wasn’t poisoned by that preconceived ideology atmosphere. He didn’t even realize the lines existed to begin with, and as such likely was free to create a masterpiece of coding.

So, Euclideon is not a hoax?

It’s not likely that Bruce Dell and Euclideon are trying anything funny. If anything, they seem to have figured out something that much of (if not the entire) industry failed to. All it took was thinking from left field and the ability to ignore all the people who failed beforehand telling him it wasn’t possible.

It’s funny how that works out.

I’m not going to sit here and tell you that I know exactly how Euclideon works, but I can give a simplistic rundown on what I think is happening based on what Bruce Dell has already said. From my point of view, it seems to work out just fine, and has the potential to do exactly what he says it can do. Of course, I’m not a programmer either… so all I can do is give an analysis from a layman point of view. There’s plenty going on behind the scenes of this engine, and I’ve only simplified it as much as possible for a general audience to digest.

What does that mean for the industry as a whole?

Just what Bruce Dell said in the original Euclideon demo video below – Your graphics are about to get a whole lot better, by a factor of about 100,000 times or more.

When is this supposed to come out? That’s the number one question on people’s minds right now concerning this technology. Well, I can say that Bruce Dell made a comment concerning this if anyone was paying attention. He had a number in his head, and that number was about 16 months, give or take polishing things up for a release.

He said that around August 2011, and 16 months from then is December 2012. Give or take, that means a near Christmas release for 2012, or Spring 2013 release. There’s a good estimate for you to shoot for.
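The month arithmetic is easy to double-check. This is a small sketch with a made-up helper, `add_months`, that only tracks the year and month:

```python
from datetime import date


def add_months(start, months):
    """Return the first day of the month that falls `months` after `start`."""
    total = start.year * 12 + (start.month - 1) + months
    return date(total // 12, total % 12 + 1, 1)


print(add_months(date(2011, 8, 1), 16))  # prints 2012-12-01
```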

If Bruce Dell is particularly a man of twisted humor, he’d release Euclideon as a demo on December 21st, 2012 (Last day of the Mayan Calendar). Something tells me that is a very likely date, because it’s the end of an era… and would be fitting. If Euclideon does exactly what he’s claiming it does, then it may as well be the Apocalypse for polygons, unleashing an era of photorealistic gaming and virtual environments.

Hi, could you say where you got the 16 months figure from? You may have got mixed up with this press release in September 2010:

http://www.euclideon.com/news/13/

Really great article, gave me a lot to think about! :)

@Rax0983: It was in a random interview, but it's not a solid number.

My actual estimate was (realistically) between 16 and 18 months which puts it at around Q4 2012 or Q1 2013 as an expectation. They tend to release things about once a year, and they were making a lot of progress last year. I've read and watched a lot of the interviews and such, so it's a jumble of knowledge and odd quotes I picked out from Bruce Dell through inference.

For instance, when he said "100,000 times more detail than current video games _not using procedurally generated approaches_", that was the clue that set me off on this article and on piecing the technology together in a plausible manner.

He also explained the search algorithm approach, and said it only pulls the atoms from the models that correspond to the pixels on screen - so I wrote up how that system would work out and what would have to happen to enable it.

That being said, no it wasn't from the 2010 press release that the 16 month number came to be used. It was from a 2011 interview where it was mentioned in passing, but dismissed in the same sentence. I think it was something like "I'd like to say 16 months, but..." and he added some other cover-up why it wouldn't be.

Which brings me to the 16 - 18 month timeline. He'd like it to be ready before Christmas 2012, but is acknowledging 2013 as the year of Euclideon because he'd also rather it be finished and really ready before it's let out.

So the earliest anyone should expect to see this is Q4 2012, and the latest I'd say is Q1 or Q2 of 2013 if they are *really* running behind.

Actually, the idea of 'disappearing' and not showing the current state of the project is a smart idea. How many people's projects have been bought up or ensnared in red tape when they have shown that something can actually be done? As long as their would-be competitors don't think it is possible they will probably leave them alone. I am hoping, actually, that they haven't given enough away already?

I also wonder if there will be some decisions put on hold in the virtual world until they can see if it is real or just a pipe dream? I can imagine all across the marketplace of virtual goods that instead of investing in the latest and greatest of current rendering engines that they may decide to shelve those ideas for a year or so, as there would be no point in investing that much in a current system when it might be completely irrelevant a year from now?

@Lord I'm cautiously optimistic about all of this. From what I've been able to ascertain from interviews and videos that Bruce Dell has actually done, there have been quite a lot of subtle hints and blatant acknowledgments on his part that are meaningless when taken individually, and meaningful only to those who start making the connections.

I'd say that, as explained thus far, it seems very plausible. The secret wasn't just in the rendering engine but also in the indexed point-cloud data models as well and how they are accessed by the engine at any given point. That being said, there are some hurdles still to cross such as the data compaction, as well as a few other things behind the scenes - but I would think at this point that if Bruce Dell is as confident as he already is, then they must have solved most of it already and just aren't telling anyone outright.

I agree that "disappearing" and not showing anyone the current state is a smart idea for exactly the same reasons you have stated. It's better to let the industry think you're a hoax up until the very moment you release version 1 to the public, by which point they'll know it's not a hoax but really won't be in any position to do anything about it. That would give Euclideon a massive head start as well as a virtual monopoly on streaming point-cloud methodologies.

As a geometer trying to make sense of the way the visual front end computes, I am looking forward to learning about the math behind Unlimited Detail.

It's terribly exciting to see Euclideon doing something which is considered by many impossible. Of course I won't be 100% convinced all of their claims are real until I can play with the software myself, but I'm very hopeful.

I often try to find new interviews and just happened to find one today. You'll probably have already seen it but just in case you haven't, here's a link.

http://nextbigfuture.com/2012/05/euclideon-continues-to-make-progess.html

@079 I'm not entirely convinced myself, however I am very optimistic because we can at least say:

Under these very narrow constraints this would be feasible as one method of accomplishing "Unlimited" detail. And as far as I continue reading from interviews like the one you've posted, I keep looking back at articles like this one and seeing how close I really am to explaining what they're doing. "Impossible" stays in quotes for me, because I do believe what they are doing is completely possible.

@chorasimilarity I'm pretty sure I heard Bruce Dell mention somewhere that the mathematical operations were reduced to mostly addition or subtraction in a highly optimized fashion.

Some simple questions

1. How can we decide if the engine works well on dynamic stuff if the demo is 100% static?

2. If memory isn't an issue, why are the same few objects repeated endlessly in tiles?

3. Why is the homepage/facebook/youtube-channel dead and not updated since 2011?

4. Unlimited Detail was first showcased in 2003. How can we trust a company that never reveals any details?

I'll answer simply.

1. Only the demo they showed for the games conference was static. Prior demos were not static. As stated multiple times by Bruce Dell, they had a limited amount of time to put together a demo for that event, and that's what they came up with on short notice. Just because the demos they showed that were not static are old does not mean that Euclideon suddenly had amnesia and forgot how to replicate those results today.

2. Ever try to create a square kilometer of three dimensional content in under 3 weeks without repeating anything and having access to a single artist? While this can be answered either pessimistically or optimistically, it's a matter of choice which you choose to run with. I'm leaning on benefit of the doubt because all virtual environments use repeated materials and models - it's a basic premise that is understood.

3. Because they have better things to do than appease half the world calling bullshit and another half praising them. Such as actually working on the engine and tools so they can make a release.

4. Being in the technology business myself, and a part of a number of projects, I can state that the most likely reasoning for not just coming out with the details and going hog-wild comes down to the most obvious answer: They aren't talking because they're filing the proper IP and Patent Protections ahead of time before they do. That takes a little while to go through (especially if they are filing a slew of patents, which they likely are).

4b. Showcased is a strong word for Euclideon/Unlimited Detail as of 2003. Keep in mind that up until about 2011 it was a single hobby programmer, so I'd give more weight to Euclideon as a company effort and advancement than to the 2003 to 2011 period when he was effectively on his own. Kept in perspective, from 2010 to 2012 they have made a lot more strides than Bruce Dell would have from 2003 to 2009/2010.

The bottom line is - Euclideon works more like a 2D engine than a 3D Engine, but because of perspective on a 2D screen, you wouldn't know the difference. That's really the underlying point and secret of what they're doing differently.

Is it not, in fact, the other way around? Instead of projecting things on the view plane they, instead, work in view space. View space is a quadtree (implying the view pyramid, its quadrant subpyramids etc. down to pyramids corresponding to pixels) while the object space is an octree. The octree is descended in the quadtree, in front-to-back fashion. Occluded octrees (none of their front faces has a subsquare in a free subpyramid: that's perhaps the relevant patent).

When I say it works more like a 2D engine, what I mean is - the file format itself for the point cloud data is indexed and then correlates to pixel screen space in 2D. The engine only asks up front for the individual point cloud pixels from the models that can correspond to the fixed 2D screen space while preemptively omitting the other 99% of the data through the search algorithm.

What you see, then, is the 3D view but it's really a trick of perspective in a 2D space allowing the Euclideon Engine to deal with photo realistic models without the bulk of the data to process.

Thanks (interesting post by the way, you moreover managed to reveal a Euclideon UD game!). As for 2-D, I think you're correct: the octree is a dimension-reducing device i.e., its complexity in terms of number of subtrees is as the surface (smaller than the volume except fractal style things) of the object. One has the distinct impression that one will soon reverse engineer their tech. In fact there's a certain algorithm, having UD properties like freedom from *, / or floats, at most one point per pixel, involving object octrees & view 3-D quadtrees, that should be implemented by a good programmer. I'm working on it but summer heat is a problem.

I'd love to see what you come up with :) Keep me posted (if you would like). You can find me on Google+

Here's the algorithm: https://docs.google.com/file/d/0B0Tw1fnDScRsekJQRHg3LTB3eGM/edit?usp=sharing. If a good programmer were to implement this (e.g., a reader of this blog) then at least we would know what UD is not (should it be slow). In any case, I'm sorting out impl. details.

@Will Burns

The name "Euclideon" hints at some geometric operation.

My take is that this operation may be the dilation (scaling wrt a basepoint). Most of the algorithm could consist in manipulation of syntactic trees (nodes are dilations as gates with two entries, namely basepoint - point, and one output, namely dilation of basepoint applied to point; each node is decorated with the scale, a positive number) and rewriting rules based on algebraic properties of such dilations, like: they preserve basepoint, they are invertible, they are linear (therefore the dilation operation is self-distributive). Only after putting a syntactic tree in some normal form the expression is evaluated.

If so then I would be very excited, because that is what I do lately as a geometer, on spaces with dilations (in particular the Euclidean spaces are the most trivial example of such).

@chorasimilarity

I'm leaning toward the geometric operation as well, but I know Bruce Dell mentioned in passing that the algorithmic operations are reduced to simple addition and subtraction for speed & optimization reasons. The other idea I find interesting is the ability to ignore a majority of the data in a model, and only stream points that correspond to the pixel space on screen. It's a good idea, actually - Why treat it like a 3D calculation when you can pose it like a Streaming 2D calculation and ignore most of the input and CPU/GPU overhead up front?
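To show that "addition and subtraction only" is at least plausible for octree-style data, here is a sketch of one step of descending an octree with integer coordinates. It is an assumption about the general flavor, not a description of what Euclideon does. The function name is made up, and the halving is done with a bit shift instead of a division.

```python
def child_centers(center, half):
    """Centers of the 8 octants of a cube, using only +, - and a right shift.

    `center` is an integer (x, y, z) and `half` is half the cube's edge
    length (assumed to be a power of two, at least 2).
    """
    quarter = half >> 1  # halving by bit shift, no multiplication or division
    x, y, z = center
    return [
        (x + dx, y + dy, z + dz)
        for dx in (-quarter, quarter)
        for dy in (-quarter, quarter)
        for dz in (-quarter, quarter)
    ]


for c in child_centers((0, 0, 0), 2):
    print(c)
```

Repeating this step on whichever child is in view walks down to finer and finer cells without ever needing floats, multiplication, or division.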

@Unknown

You should try reading the Disclaimer at the top of the blog before you waste my time with nonsensical and personal assertions based on logical fallacy.

http://xbigygames.com/euclideon-still-alive-with-new-footage/

"According to Dell, the problem with creating several features, such as physics, deformable environments, animation etc. is that current middleware is simply not built to function with the atomic structure"

Bruce keeps going with the excuses. Logic if you brag too much and give too much expectations. Time to get realistic.

Unfortunately your assertion still doesn't hold up, because it falls under a classic case of Post Hoc Logical Fallacy. I could have sworn I asked you to not use logical fallacies to make your case here, and even have pointed where to reference what ones to avoid?

What is Post Hoc: This fallacy gets its name from the Latin phrase "post hoc, ergo propter hoc," which translates as "after this, therefore because of this."

Definition: Assuming that because B comes after A, A caused B. Of course, sometimes one event really does cause another one that comes later—for example, if I register for a class, and my name later appears on the roll, it's true that the first event caused the one that came later. But sometimes two events that seem related in time aren't really related as cause and event. That is, correlation isn't the same thing as causation.

In your case, you make the Post Hoc fallacy on purpose, by omitting the rest of the cited statement and drawing false conclusions based on the out of context part you are using to support your idea.

Bruce Dell says it's a challenge, but did not say it wasn't possible nor had they not made progress in those scenarios. He did, however, state plainly that while such is a challenge due to current middleware assumptions that must be worked around, he is confident they will work around those issues.

"We would prefer not to talk about things until we are happy enough to show them." does not mean, as you assert, that they are wholly incapable of doing so. It means they likely already are but are not happy with the final output enough to make into a public demonstration.

From the article: http://bit.ly/KPNPhP

"In the 2011 “Island” demo we were shown a vast landscape with models created either from scratch or by scanning-in actual objects from the real world. What many criticised was the lack of actual animations found in the video. According to Dell, the problem with creating several features, such as physics, deformable environments, animation etc. is that current middleware is simply not built to function with the atomic structure. “Our animation efforts have been focused on the ability to convert animated polygon objects into unlimited detail objects with no lost information,” he elaborates. “Its quite difficult but I’m sure it will work in the end. As for physics, these are atoms not polygons so a lot of physics and deform-ability has to be recreated. We would prefer not to talk about things until we are happy enough to show them. The truth is we are working with converting movie quality animation and lighting from polygons to deformable point cloud.”

For future reference, please review: http://bit.ly/KPO3FG before making further comments. It is the handout from University of North Carolina explaining how to avoid using Logical Fallacies in your debate/argument which weaken your case or invalidate your claims. I'll be using this as the criterion to determine whether or not I should bother allowing another of your comments through.

Thus far you've used just about every logical fallacy in making your point - Appeal to Ignorance, Appeal to Authority, Post Hoc, Ad Hominem, etc. and have yet to make a single compelling point which can be supported.

It must be very hard for you if you use that academic talk with everyone you're talking to. What is right/wrong often ends up being subjective anyway. Discussions can be interesting regardless of that.

For an everyday developer it still comes down to:

1. We don't have memory to store kilometers of detailed data and you have to sacrifice something to make it possible today

2. We don't have the power to simulate millions of atoms in games, and polygons solve that by working at the mesh level

2. Modern engines are flexible, with everything from dynamic lighting to complex bone animation, and Euclideon is not there yet, so this is not an alternative in the near future.

It's fine if Bruce wants to do research on future technologies, but nobody gains anything positive from his vague claims all the time.

You actually believe that they will release something in December and things will be like 100,000 times better. I don't follow your logic on this. If Bruce continues with excuses and no deadlines, do you just think, well, he's on the right track and it just takes even more time?

Nice! Another Ad Hominem :) For reference, that's when you attack the person and ignore the actual debate. Let's address each of your points - considering they all rest on Appeal to Ignorance, Ad Hominem, Missing the Point and Post Hoc.

What is right/wrong is subjective only when it's based on opinion and not facts.

1. Does a Blu-ray player need to load the entire 50GB worth of 1080p HD movie up front in order to play it on your computer with 4GB of RAM? When you understand this point, you'll understand your arguments to the contrary have been inconsequential at best. By your logic and argument so far, you've proven that it's impossible to watch a Blu-ray disc. This is why you shouldn't use logical fallacies for your debate.

2. See also #1

3. Post Hoc: Because of something seemingly related but not, this also must be true. Euclideon are not there (yet) but that does not mean they will not be in the near future.

For your convenience, I added #3.
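The Blu-ray point in #1 is ordinary streaming: read a bounded chunk, use it, discard it, repeat. As a minimal Python sketch (the helper name `stream_chunks`, the 1,024-byte chunk size, and the in-memory stand-in for a large file are all assumptions for illustration):

```python
import io


def stream_chunks(source, chunk_size):
    """Yield successive chunks from a file-like object, one at a time."""
    while True:
        chunk = source.read(chunk_size)
        if not chunk:
            break
        yield chunk


# 10,240 bytes standing in for a file far larger than available memory.
source = io.BytesIO(bytes(range(256)) * 40)

largest_chunk = 0
total_bytes = 0
for chunk in stream_chunks(source, 1024):
    largest_chunk = max(largest_chunk, len(chunk))
    total_bytes += len(chunk)
```

The whole source gets consumed, yet the consumer never holds more than one chunk at a time. Whether a point cloud can be streamed this way depends on the data being laid out so the needed chunks can be found quickly, which is a separate question.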

I believe that 100,000 times better is subjective to the context of the engagement. This is something I've mentioned multiple times, and explained in detail in this article. Every claim you've made to the contrary was and is explained already - in plain view for you to read here, in the most basic premise possible. For some reason you continually fail to grasp this very simple premise, and that puzzles me most.

I believe he's still on track because I actually understand what he's doing in that process. Since you're approaching this from the viewpoint of the everyday developer, I'll use an appropriate analogy to make this clear:

Modern day graphics engines were built with the benefit of existing graphics libraries such as OpenGL and DirectX 11. These graphics libraries are pre-built routines for mathematical operations and calls to modern GPUs, and these libraries are pre-built for the modern graphics engine designer so they simply make a DLL call instead of re-writing the math from the ground up for each thing they want to do.

What Bruce Dell is doing does not have the benefit of using modern graphics libraries such as OpenGL and DirectX 11 to make assumptions. Where OpenGL and DirectX calls are the equivalent of using a high level programming language (such as C++), Bruce Dell is in a position where he cannot code with assumptions using those graphics libraries. He must re-write each of those things a modern graphics engine developer takes for granted, and then write the translation so that the "modern" GPU can understand how to handle it.

The analogy then becomes the difference between writing a program in C++ (which is a high level environment and takes many things for granted) and writing a program in Assembly which assumes absolutely nothing.

When Bruce Dell said that modern GPUs aren't really built for atomistic engines, he's right - modern GPUs are built on the assumption that they are supporting either DirectX or OpenGL, both of which assume Polygon engines and related calculations, but there is no instruction set on those GPUs on how to handle something other than Polygon based systems unless the instructions are written from scratch. This also explains why Euclideon is currently optimized and running on the CPU entirely, and not yet touching a GPU. See what common sense and logic gets you?

So, you see, when you actually understand the situation at hand and the details involved, one does not simply make the assumptions and claims you have been making, because they seem really silly in hindsight.

Understanding that many parts of Euclideon (if not all) are Assembler operations which are re-defining graphics operations at the core in order to make up for the fact that modern graphics libraries and GPUs don't speak the language and favor Polygons entirely - then yes, he can take his time to figure that out and make progress.

(cont)

Unlike an "every day" developer - Bruce Dell and Euclideon are actually having to program from scratch, and re-invent entire graphics libraries without the benefit of cheating (using OpenGL or DirectX).

So yes, in context of the situation, Bruce Dell is right on time regardless of how impatient you may be.

@Unknown

I've omitted your latest comment on the premise of total absurdity on your part.

1. You don't have to load the entire level up front when you are streaming the points in relation to the pixels on screen and no longer dealing with the constraints of loading entire polygon models in entirety. I already covered this point, but you continue to assert that the constraints of the existing methodologies apply to something else out of context - apples and oranges.

2. I've already covered that it's not a limitation of computer hardware in my prior reply. You continually assume (erroneously) that the inefficiencies of processing are due to the hardware and not the software instructions controlling it. Above you will find that assertion already explained away - as it has been prior in detail.

3. The assumption of "hardware limitation" is entirely based on your original assumption of "unlimited detail in entirety" when I've already clearly stated that such wasn't possible. unlimited detail in entirety is not possible because it *would* require unlimited processing power to achieve, which we do not have. At no point have I stated it was unlimited detail in entirety but instead in pure context to what the end-user could discern, which is a wholly different situation than what you continually base your argument on.

4. At no point have I ignored the limitations of hardware in proper context to the situation at hand - in fact, I have taken that readily into account when making my assessment of the subject and, under those constraints, how such would be achievable via very clever coding and approaches.

In short, you are approaching this subject with the assumption that Euclideon is built within the constraints of existing polygon processing methodologies when it is not, and thus the inefficiencies and approaches will not transfer over. The limitation was the approach to the problem, not the hardware.

Graphics platforms such as OpenGL and DirectX make assumptions that do not apply to atomistic behaviors or the process by which a 3D scene is rendered. They assume the model is mesh and needs to be loaded in full up front before working with them, which is true of polygon models but not of the Euclideon engine.

In short, your arguments thus far simply do not apply here.

I'll leave you with that bit of understanding, whether you grasp what is being said here or not is not my concern. Thus far you are having a very hard time really wrapping your head around what is being said, so I'll leave you with whatever it is you wish to believe - because just like Euclideon, I really don't care if you choose to believe this or not. You are hellbent on disproving something based on existing practices when those practices do not apply - and no amount of explanation from my part is going to convince you otherwise.

dude, you destroyed that guy's argument and i love you for it :)

I try my best sometimes. I mean... I'm open for differential consideration, but not when the foundation is pulling stuff out of one's ass.

May 31, 2012: Well look at this... Euclideon releases another video showing some progress.

http://xbigygames.com/euclideon-still-alive-with-new-footage/

I'm not seeing anything repeated here.

I saw this video a couple of days ago, but it doesn't seem to impress people as much as the earlier videos. It would have made a lot more impact if they had shown thousands of copies of this same scene side by side running on a laptop, or perhaps included the Island they made before and placed this setup within it. After all, it's not so much what they're doing but the efficiency of the process that makes their software impressive, and that doesn't come across here as effectively as it could. I'm guessing they didn't because a lot of people would start to criticize the lack of unique objects.

The video looks like a test for data compaction and visual quality. ~76% Realism @ 32FPS. I would have to disagree about showing thousands of copies of the scene side by side, because then people would fall back to the argument of having to repeat everything because of memory limitations.

As for me, this video is pretty impressive because it gives me a deeper understanding of what point Euclideon is at in their work. If you consider that they likely had to write this whole system (including the graphics pipeline) from scratch starting with Assembler, any programmer worth their salt would immediately understand the herculean task in front of Euclideon, as well as give Bruce Dell his props for getting this far instead of acting like a bunch of jealous haters.

We should be in the cheering section for Euclideon and not being pessimists. We're our own worst enemies when it comes to innovation and advancement - always holding ourselves and others back.

Will, great article. From reading the responses of the Unknown poster you conversed with earlier, I actually know who that guy is. His name is Tomas Eriksson from the Facebook Page and hombacom on Youtube.

The reason I know this, is because I've tried to explain these ideas to him multiple times, but he insists on repeating those same arguments without any numeric values to back up his claims. Also, he told me he's been a programmer for 15 years, no seriously, he did.

Nonetheless, the reason I'm posting this is to bring a few things to your attention (not including who the Unknown was).

1. What neither you nor really anyone else has spoken about is the fact that Euclideon may very well have created their own format, like Autodesk with .FBX or Adobe with .PSD.

I actually thought of this because of Red Cameras. You may not be aware of who Red Digital Cinema is, so allow me to explain. Red is the single most advanced digital camera company in the world, bar none. They did what no other large company ever accomplished.

But they did this a few ways. They not only built the most sophisticated camera, they also built their own format. Red uses what they call, .R3D as their format. The beauty of a Red Camera is that it shoots in RAW Format. RAW Files allow for the camera sensor to hold color information inside the file, with no color applied until it is later processed through another Red technology called RedGamma.

This color information is held (along with a few other things) inside what is called Metadata. The idea of only retaining color information is EXTREMELY PLAUSIBLE for Point Cloud. If Euclideon was able to replicate a similar approach, only 2.0736M pixels (1080p, i.e. 1920 x 1080) would have to be processed, while all other color information stays dormant until used.

This would allow for not only amazing data compaction, but the ability to stream in color processing and not entire datasets. Also, by them creating a specific format, they could create it in such a way that 3rd Parties could implement it in standard 3D Packages down the road.
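For reference, the 1080p arithmetic behind point 1 can be checked in a few lines of Python. The helper name `frame_bytes` and the assumption of three color channels per pixel with no padding or compression are illustrative only:

```python
def frame_bytes(width, height, bits_per_channel, channels=3):
    """Raw size of one uncompressed frame, in bytes."""
    return width * height * channels * bits_per_channel // 8


# 1920 * 1080 = 2,073,600 pixels, i.e. about 2.07 million.
pixels_1080p = 1920 * 1080

# Raw per-frame cost at 8, 10 and 12 bits per channel.
sizes = {bits: frame_bytes(1920, 1080, bits) for bits in (8, 10, 12)}
```

At 8 bits per channel that comes to roughly 6.2 MB of color data per frame, which is the quantity that scales with screen resolution rather than with the size of the source model.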

2. The other aspect I would like to bring up is File Size. Everything I'm about to say is completely speculative and could very well be deemed incorrect on MANY LEVELS.

Anyways, many people (including the Unknown) have stated numerous times that incredibly detailed objects would take up large amounts of storage. This may not actually be the case.

Suppose you went into a 3D Program, loaded up the default sphere, and made it 100 Polygons, then loaded up another window with the default sphere and this time made it 1 Million Polygons. In my estimation, when converted to Euclideon's Format, both should be virtually identical in file size.

The reason I say this, is because both are converted equally. The conversion rate is 64 atoms per Cubic MM. Since both spheres are the same size, they are essentially made up of exactly the same amount of Cubic MM's. It's like, if you took both spheres and filled them with water. Both spheres use the same amount of water, but instead of water, think of it like atoms.

Again, that may be very incorrect, but from a mathematical standpoint, a measurement has no alternative but to break down accordingly. I believe the deciding factor in File Size will be what color attributes are applied. Whether they are 8 bit, 10 bit or 12 bit color might be what boosts file size. Other than that, in my opinion, the detail in the asset has no bearing on File Size.
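Taking the 64-atoms-per-cubic-millimetre figure at face value, the estimate above can be written out as a short Python sketch. The function name `atom_count` and the 10 mm radius are assumptions, and whether a real converter fills the whole volume (rather than just the surface) is not something the source settles:

```python
import math


def atom_count(radius_mm, atoms_per_mm3=64):
    """Atoms needed to fill a solid sphere at a fixed density per cubic millimetre."""
    volume_mm3 = 4.0 / 3.0 * math.pi * radius_mm ** 3
    return round(volume_mm3 * atoms_per_mm3)


# Polygon count never appears in the formula, so a 100-polygon sphere and a
# 1,000,000-polygon sphere of the same radius give the same estimate.
low_poly_atoms = atom_count(radius_mm=10)
high_poly_atoms = atom_count(radius_mm=10)

# Doubling the radius multiplies the volume, and so the count, by about eight.
growth = atom_count(radius_mm=20) / atom_count(radius_mm=10)
```

Under this model the size is driven by the object's physical dimensions and the bits stored per atom, which matches the commenter's guess that color depth is the deciding factor.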

**Also, one more thing. You are absolutely correct in saying that people who only worked in Polygon Fields are struggling with this. The beauty of Euclideon is that it shows how one-dimensional the world has really become. It's almost like everything that's been created can only be improved upon, nothing can be invented anymore. It's like we hit the pinnacle years ago, and all we have to do is polish it up more.**

Actually I did mention the idea that they are likely using a custom file format in the Procedural Methodologies section of the post:

"Therein is the secret for Euclideon. The typical 3D Engine is the equivalent of having to travel from the beginning to the end of the Internet to find what it needs, whereas Euclideon is the equivalent of skipping 90% of it and bringing the results in milliseconds. This, in turn, makes Euclideon a highly optimized graphics engine, and the indexing of the files happens when you’re converting the high resolution polygon models into the Euclideon point-cloud data format (the equivalent of Google crawling the Internet)"

2. Algorithmic Interpolation was my thought on the data compaction methods.

But yeah, I follow what you're saying. Polygon methodologies are the ones having trouble wrapping their heads around this stuff because it seems to just break everything they've known.

Also, I think those points were made in more detail with the Quantum Rush and Quantum Rush: Duex

Great article. About the last paragraph, though: I don't think this will be the apocalypse for polygons, especially for games that are supposed to look "oldschool" (SuperOfficeStress and 0x10c, to name a few), where polygons are enough. The cost of the technology may also be a problem. If they release the SDK for free, or go with an open source business model and charge for support instead, the technology will be adopted very quickly. But if they demand $3000 (random big number) for a license, that will limit adoption.

I'm on the fence about that - I mean, Crytek charges $750,000 for a commercial license of their engine. I think it could go either way. As for the polygon part - Euclideon does atomistic cloud models, but that could be just about anything. Just because it can do photorealism doesn't mean it can't do less than that if you import the models. Just some food for thought.

Adoption will be very very quick no matter what they charge, at least in the gaming industry. Their graphics detail speaks for itself, it's a must have the day the first game is released.

For the console and mobile gaming industry, this will suddenly put all these platforms on equal footing graphically.

The easiest to adopt and most profitable pricing model would be a percentage of the profits made by any game that uses their tech. And considering the gaming industry is bigger than the movie industry, Bruce Dell is about to join the list of the richest people in the world.

The mainstream typically puts the cart before the horse and as long as the majority keeps looking at the wrong ends, while solutions are being developed behind the scenes, the pace of progress will be hindered.

Now, imagine if we combine the Euclideon engine with efficient, adaptive, autonomous, in short 'real' artificial intelligence. That would mean intelligent character behaviors, on-the-fly generation of new scenarios or environments, perhaps even more remarkable things. It could employ the liberated GPU. I know, that's impossible right? We would need extreme supercomputers to pull that off? Well, no - only if we stick with current mainstream methodologies and understandings of what "intelligence" is. It's truly a paradigm-shift issue. The human brain does not run on algorithms but on neurons, so all we need to do is to replicate neural networks and make plenty of them interconnected and "alive".

Stephen Thaler and his company Imagination Engines did just that. He started out pretty much the same way Bruce did, as a renegade to the established industry. Consequently he is not taken seriously by leading 'experts' and has a hard time convincing anyone (except the government and certain corporations that utilized this technology for years) that his systems can in fact accomplish what they do without any exorbitant supercomputer farms.

Bruce and Steve should work together to bring us straight into the future. If only more people would be visionary enough to direct their investments and energy away from the encrusted ways of doing things towards the more groundbreaking ones.

Taking a read of the http://imagination-engines.com website and it looks really intriguing. Of most interest to me is the Robotic Simulation Environments section - though likely for reasons which aren't inherently obvious.

Yes, IEI and Euclideon paired together would be really interesting... thank you for the heads up!

Any comments about the morphing of the Euclideon web page into Euclideon: Geoverse? Sure, it looks less and less like a hoax (I never believed it was one), but there is still no real clue about the math behind it.

Well, I did expect them to come up with something far more tangible than just a quick demo before the end of this year, as stated above. So the first stage is just commercializing the UD engine for the spatial visualization industry.

On its own, the UD Engine already brings the file sizes down to 5-20% of the original size. But then taking that further, the 3D Search function (like I mentioned in the article here) allows LIDAR data to be visualized in real time, where before that simply wasn't possible.

At this point, I believe the GeoVerse product is a step toward what they were doing before as a possible game dev kit, but that is likely to surface around 2013 (maybe first quarter?).

All in all, for anyone who has been running around saying this is impossible - well, they are now free to call Euclideon and request a demo.

Surprise, there is no info about their Geoverse product yet

Surprise! You neither read their PDF on the product nor have the balls to call them for an actual hands on demo as stated on the prior mentioned PDF.

That would be too easy and the quickest way to put your ignorant bitching to rest, now wouldn't it?

Hello, can you make sense of this, related to "mass connected processing"?

http://chorasimilarity.wordpress.com/2012/10/13/mass-connected-processing/

Mass connected processing is where we have a way of processing masses of data at the same time and then applying the small changes to each part at the end. This is likely due to the above-mentioned methodology of point cloud search, wherein we can search through a bunch of data (processing masses of data), pull only the points in view on screen via the 2D viewport, and change only the pixels on screen that are different (applying the small changes to each part at the end).

I hope this clears it up a bit :)

Wow, now it's clear :)

I know there are commercial reasons for not disclosing the algorithm, but as a "pure math" researcher, I just want to know.

If you were to look at it above, you'd likely see what they are doing that is different. The Euclideon file format comes across as one where the individual points in the point cloud inside the file are indexed like a search engine would index sites. Additional points in between can be reduced or eliminated but replaced with procedural methods to infer more detail based on nearest neighbor points (like the scaling algorithms used in game emulators today). By this method, they can pre-occlude data before opening the file by stating in advance what points exactly it is looking for and telling the system to ignore the other 98% of the file in advance.
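As a rough illustration of "index first, then read only what you asked for", here is a small Python sketch of a uniform-grid spatial index. It is a stand-in technique, not Euclideon's format: the names `build_index` and `query`, the cell size, and the test data are all assumptions.

```python
from collections import defaultdict


def build_index(points, cell_size):
    """Bucket 3D points by the grid cell they fall into (done once, up front)."""
    index = defaultdict(list)
    for p in points:
        key = tuple(int(c // cell_size) for c in p)
        index[key].append(p)
    return index


def query(index, cell_size, lo, hi):
    """Return points inside the box [lo, hi], visiting only the overlapping cells."""
    lo_cell = [int(c // cell_size) for c in lo]
    hi_cell = [int(c // cell_size) for c in hi]
    found = []
    for i in range(lo_cell[0], hi_cell[0] + 1):
        for j in range(lo_cell[1], hi_cell[1] + 1):
            for k in range(lo_cell[2], hi_cell[2] + 1):
                for p in index.get((i, j, k), ()):
                    if all(l <= c <= h for l, c, h in zip(lo, p, hi)):
                        found.append(p)
    return found


# A 10 x 10 x 10 block of points, indexed into 125 cells of size 2.
points = [(x, y, z) for x in range(10) for y in range(10) for z in range(10)]
index = build_index(points, cell_size=2)

# Asking for one corner of the block touches a single cell out of 125.
hits = query(index, 2, lo=(0, 0, 0), hi=(1, 1, 1))
```

The expensive pass over all the data happens once at indexing time (the "crawling" step in the article's search-engine analogy); each later query reads only the buckets that overlap the region of interest.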

Hi there again, here is my precise mathematical guess about how it might work.

"Digital materialization, Euclideon and Fractal Image Compression"

http://chorasimilarity.wordpress.com/2012/11/15/digital-materialization-euclideon-and-fractal-image-compression/